Hey Champions! Vespers here!

At Warp Academy, it’s my intention to seek out the most experienced professionals in their respective fields. When it comes to mastering engineers who specialize in electronic music, I can think of no one better than Warp Academy’s Erik Magrini (a.k.a. Tarekith). Erik is my own personal mastering engineer, and I recently reached out to him to ask about mastering best practices. Erik delivered the goods and has provided some deep insights into the ever-evolving landscape that is the vast world of mastering. Here is Erik!

“Over the last few years there have been some dramatic shifts in the field of audio mastering, changing how we prepare and process music in that final stage before release. With the move away from physical products like CDs, cassettes, and to some extent vinyl, and the general public’s wider embrace of digital streaming, the tools and methods for finalizing your songs have undergone some rapid changes. Warp Academy has asked me to talk a little bit about some of the current trends, and explain how these can impact the way your own music is heard.

LUFS (Loudness Units relative to Full Scale)

By far the most dramatic change in recent years is the move towards online streaming as a primary means of both promotion and distribution of music. And by streaming I don’t just mean the obvious choices like Spotify, Pandora, or Sirius, but also (and potentially more importantly) online video channels like YouTube and Vimeo.

Just as with FM radio, it didn’t take long for broadcasters to realize that playing songs from different artists and albums back to back could lead to sometimes drastic jumps in volume between songs. In the old days of FM, this was addressed primarily by compressing the quieter songs to bring them up to the same volume as the louder songs, for a more consistent volume across all tracks. Broadcasters could usually get away with this because back then the vast majority of songs had plenty of dynamic range to work with, so some compression could be transparent, or at least not always harmful to the music.

Today, however, that same approach doesn’t work. As producers have gained the tools to make their songs louder and louder over the years, the idea of dynamic range as a good thing has slowly disappeared. Enter the much-talked-about “loudness wars”. So when it comes to keeping the volume of streaming audio consistent, a new approach was needed, and for that we now use loudness normalization. Most musicians think of normalizing as a way to make things louder, but in this usage it’s typically doing the opposite: turning down the volume of extremely loud songs to match that of quieter songs.

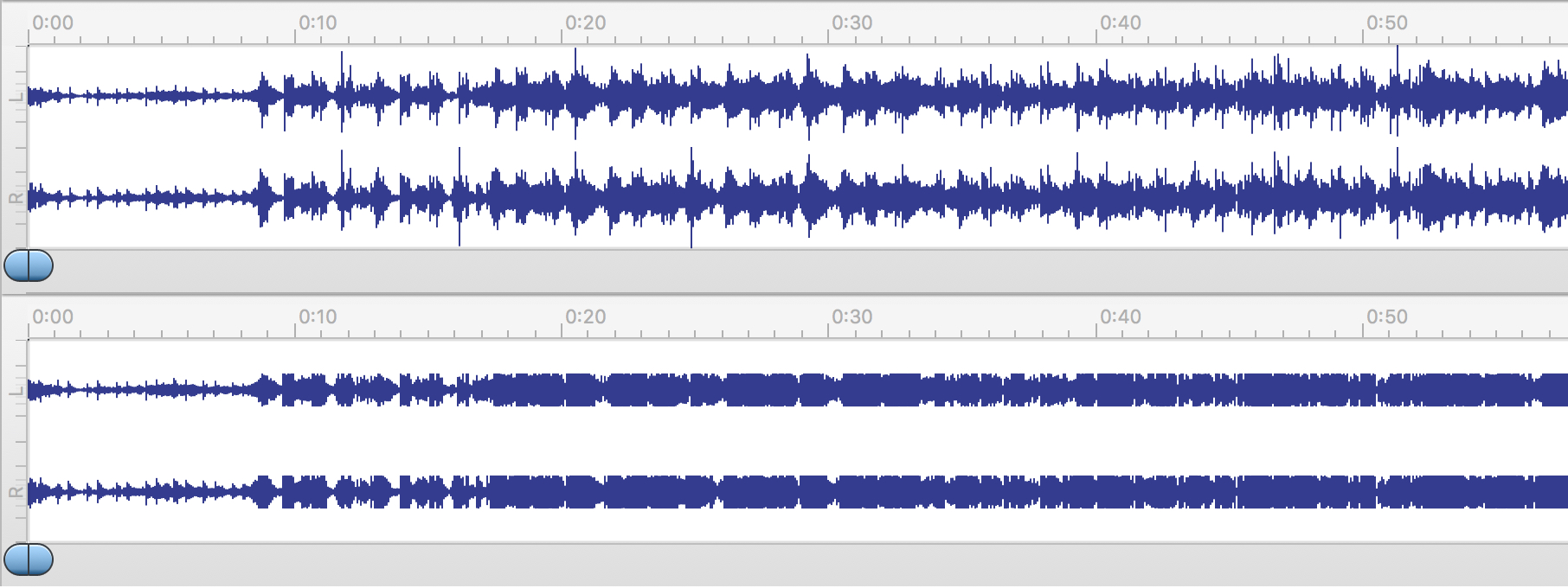

(Figure 1. Both of these songs have the same apparent loudness, but the second track has been turned down considerably to make this happen.)

The net effect is that, by comparison, songs that have punch and dynamics will tend to have more impact and detail than songs that are heavily limited or compressed as in the past. That’s not to say that you can’t still use some compression to glue the sounds of your track together, just that doing it excessively simply for the sake of volume can make your song sound worse when it’s played back to back with more dynamic songs. The key to achieving the best sound quality under this new paradigm is understanding how streaming services measure the volume of your song, and making the best use of that measurement when mixing and mastering. And for that, we need to talk about LUFS.

LUFS stands for Loudness Units relative to Full Scale, and it’s a way of measuring the overall volume of a song that’s much closer to the way the human ear perceives volume changes. Many people mistakenly assume that RMS (root-mean-square) metering is intended to do this. While RMS is closer than peak metering to helping us judge average loudness, it’s strictly a mathematical measurement of signal power over a short window, and doesn’t take into account how our ears perceive loudness over longer and shorter time periods. The integrated LUFS measurement of a song takes into account the overall volume of the entire song, from start to finish, giving you a much more accurate way of judging how loud it will sound compared to other songs. The algorithm used to make the measurement also takes into account the way our ears can ignore short loud transients, the fact that our ears are more sensitive to some frequencies than others, how many channels there are in the audio, and various other factors that RMS does not. If you’re curious about the details of this measurement system, the full specification (ITU-R BS.1770) can be accessed here.
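To make the idea of an integrated, gated measurement a little more concrete, here is a simplified Python sketch loosely following the block-and-gate approach of ITU-R BS.1770. It is a sketch under stated assumptions, not a replacement for a real LUFS meter: the K-weighting pre-filter (the part that models the ear’s frequency sensitivity) is left out, so the input is assumed to be already filtered, and the function and constant names are hypothetical. The audio is cut into overlapping 400 ms blocks; blocks below -70 LUFS (silence) are discarded, then blocks more than 10 LU below the average of what’s left are discarded too.

```python
import math
from typing import List, Optional, Sequence

# Simplified sketch of the gated "integrated loudness" measurement described in
# ITU-R BS.1770. ASSUMPTION: every channel has ALREADY been run through the
# K-weighting pre-filter; that filter is left out to keep the example short.

ABSOLUTE_GATE_LUFS = -70.0  # blocks quieter than this (e.g. silence) are always ignored
RELATIVE_GATE_LU = -10.0    # blocks more than 10 LU below the first-pass average are ignored


def block_loudness(weighted_power: float) -> float:
    """Convert channel-weighted mean-square power to LUFS (-inf for digital silence)."""
    if weighted_power <= 0.0:
        return float("-inf")
    return -0.691 + 10.0 * math.log10(weighted_power)


def integrated_loudness(channels: Sequence[Sequence[float]], sample_rate: int,
                        weights: Optional[Sequence[float]] = None) -> float:
    """Gated integrated loudness (LUFS) of pre-K-weighted audio channels."""
    if weights is None:
        # 1.0 suits L/R/C; the spec weights surround channels at 1.41 (LFE excluded).
        weights = [1.0] * len(channels)
    block = sample_rate * 400 // 1000  # 400 ms blocks...
    step = sample_rate * 100 // 1000   # ...starting every 100 ms (75 % overlap)
    n = len(channels[0])

    # Mean-square power of each channel in each block.
    blocks: List[List[float]] = []
    for start in range(0, n - block + 1, step):
        blocks.append([sum(s * s for s in ch[start:start + block]) / block
                       for ch in channels])

    def weighted(powers: Sequence[float]) -> float:
        return sum(g * p for g, p in zip(weights, powers))

    def mean_powers(selected: List[List[float]]) -> List[float]:
        return [sum(p[c] for p in selected) / len(selected) for c in range(len(channels))]

    # Pass 1: the absolute gate drops silence.
    passed = [p for p in blocks if block_loudness(weighted(p)) > ABSOLUTE_GATE_LUFS]
    if not passed:
        return float("-inf")

    # Pass 2: relative gate, 10 LU below the loudness of the blocks that survived pass 1.
    threshold = block_loudness(weighted(mean_powers(passed))) + RELATIVE_GATE_LU
    gated = [p for p in passed if block_loudness(weighted(p)) > threshold]

    # The loudest block is always above the threshold, so `gated` is never empty.
    return block_loudness(weighted(mean_powers(gated)))
```

Because silent and very quiet stretches are gated out, a song with a long, quiet breakdown reads close to the loudness of its main sections instead of being averaged down by the quiet parts.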

So, from a practical standpoint, what does this mean? In short, we now have a standardized way by which all streaming services (again, including YouTube) measure the perceived loudness of your songs and adjust their playback volume based on that reading. Using a LUFS meter, you can know exactly how loud or quiet your song will measure while you’re working on it, and make sure you’re releasing it in a way that takes best advantage of modern-day standards.

The bad news is that not all streaming services agree on what the ideal LUFS value should be. Worse, very few of them actually publish the LUFS values they use. However, with some careful sleuthing and testing, it’s been possible to determine the LUFS values used by a few services:

- Spotify: -14 LUFS

- YouTube: -13 LUFS

- iTunes (streaming and Sound Check): -16 LUFS

As a result, most songs mastered for streaming use the conservative value of -16 LUFS as a target to ensure compatibility across the most streaming platforms, since a track at -16 LUFS won’t be turned down by any of the services above. In fact, we take it one step further and aim not just for -16 LUFS, but also for -1 dBTP.
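The arithmetic behind normalization is simple: a service compares your song’s measured integrated loudness with its own target and applies the difference as a gain change. Here is a minimal Python sketch using the values listed above. The dictionary and function names are hypothetical, and it assumes the offset is applied in both directions, although a service may decline to turn quiet songs up (or may limit them when it does).

```python
# Rough loudness-normalization arithmetic. The targets are the values quoted in
# this article; services can change them, so treat them as examples only.
STREAMING_TARGETS_LUFS = {
    "Spotify": -14.0,
    "YouTube": -13.0,
    "iTunes": -16.0,
}


def normalization_offset_db(measured_lufs: float, target_lufs: float) -> float:
    """Gain (dB) needed to move a track from its measured loudness to the target.

    Negative = turned down. ASSUMPTION: positive values are reported as-is, even
    though a service may not actually boost quiet tracks.
    """
    return target_lufs - measured_lufs


def db_to_amplitude(gain_db: float) -> float:
    """Convert a gain in dB to a linear amplitude factor."""
    return 10.0 ** (gain_db / 20.0)


if __name__ == "__main__":
    for measured in (-8.0, -16.0):  # a loud "club" master vs. a -16 LUFS master
        for service, target in STREAMING_TARGETS_LUFS.items():
            offset = normalization_offset_db(measured, target)
            print(f"{measured:6.1f} LUFS on {service}: {offset:+.1f} dB "
                  f"(x{db_to_amplitude(offset):.2f} amplitude)")
```

For a track mastered to -8 LUFS this gives -6 dB on Spotify, -5 dB on YouTube and -8 dB on iTunes, so all three turn it down. At -16 LUFS the offsets are +2, +3 and 0 dB: none of the listed services would need to turn it down.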

What the heck is TP?

TP in this case stands for True Peak, and it measures the actual peak level of the audio once it’s converted back to an analog signal (i.e., what comes out of a speaker). Most digital peak meters, like those in your DAW, are actually “quasi-peak” meters, in that they have an integration time of around 10 ms, which means that extremely short transients may not be accurately measured. In addition, they don’t take into account inter-sample peaks: when your DAC (i.e., your soundcard) converts the samples back to an analog waveform, that waveform can rise above the sample values in between them, which is most likely when several samples in a row sit at or near 0 dBFS. A True Peak meter oversamples the audio to estimate those in-between peaks, so you can be much more confident that your audio will not clip at any point during playback. The good news is that almost all LUFS meters also measure TP, so you don’t need a different tool for this measurement.
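To illustrate why a sample-peak meter can under-read, here is a small Python sketch that estimates true peak by reconstructing the waveform between samples on a 4x grid with a Hann-windowed sinc interpolator. This is an illustration under assumptions, not the exact filter defined in ITU-R BS.1770, and the function names are hypothetical.

```python
import math
from typing import Sequence

# Rough true-peak estimate: reconstruct the waveform between samples with a
# Hann-windowed sinc interpolator on an oversampled grid and keep the largest
# magnitude. ASSUMPTION: illustrates the oversampling idea only; it is not the
# exact interpolation filter specified in ITU-R BS.1770.


def windowed_sinc(d: float, half_width: int) -> float:
    """Hann-windowed sinc kernel evaluated at distance d (in samples)."""
    if abs(d) >= half_width:
        return 0.0
    sinc = 1.0 if d == 0.0 else math.sin(math.pi * d) / (math.pi * d)
    window = 0.5 * (1.0 + math.cos(math.pi * d / half_width))
    return sinc * window


def interpolate_at(samples: Sequence[float], t: float, half_width: int = 32) -> float:
    """Estimate the reconstructed waveform at fractional sample position t."""
    centre = math.floor(t)
    total = 0.0
    for m in range(centre - half_width + 1, centre + half_width + 1):
        if 0 <= m < len(samples):
            total += samples[m] * windowed_sinc(t - m, half_width)
    return total


def sample_peak(samples: Sequence[float]) -> float:
    """What a plain sample-peak meter sees: the largest absolute sample value."""
    return max(abs(s) for s in samples)


def true_peak(samples: Sequence[float], oversample: int = 4, half_width: int = 32) -> float:
    """Largest absolute value of the waveform evaluated `oversample` times per sample."""
    return max(abs(interpolate_at(samples, n + k / oversample, half_width))
               for n in range(len(samples)) for k in range(oversample))


def to_db(linear: float) -> float:
    return 20.0 * math.log10(linear)


if __name__ == "__main__":
    # Sine at a quarter of the sample rate, phased so every sample is +/-1.0.
    x = [math.sqrt(2) * math.sin(math.pi * n / 2 + math.pi / 4) for n in range(512)]
    print(f"sample peak: {to_db(sample_peak(x)):+.2f} dBFS")
    print(f"true peak:   {to_db(true_peak(x)):+.2f} dBTP")
```

The test signal is a classic worst case: every sample lands at exactly ±1.0, so a sample-peak meter reads 0 dBFS, but the reconstructed sine peaks at about √2 between samples, roughly +3 dBTP.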

(Figure 2. The LUFS metering from DMG Audio’s Limitless plug-in. The TP measurement is correctly labeled dBTP, and is below the LUFS readout.)

So now that we know what we’re aiming for, how does this impact the way we master our songs? In general, much of the process is the same: you can still apply any EQ you need to the entire song, and there’s nothing wrong with a bit of compression if you feel things are a touch too dynamic. The real difference comes when it’s time to do the final limiting of the track. You no longer need to squash things to the point of distortion for the song to be competitive. In fact, doing so will actually make your track sound worse compared to other songs when streamed!

At this point, you’re instead going to apply only as much limiting as is needed to hit that -16 LUFS value, and this will vary depending on the song itself. Some songs might not need any limiting at all! You’re also going to set the limiter’s final output so that the TP reading never goes above -1 dBTP. In fact, a heavily compressed and limited song might have a TP reading much lower than -1 dBTP when it’s at -16 LUFS. That’s OK, since it’s the LUFS value we’re aiming for, not the TP value (provided TP never goes above -1 dBTP). It’s also important to remember that these measurements need to take the entire song into account, so you have to play back the entire song to get an accurate measurement (some tools, like Audiofile Engineering’s Myriad or Triumph, have an offline analysis mode to speed this up).
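The trade-off described above can be roughed out before you touch the limiter. This Python sketch takes a full-song integrated loudness and true-peak reading (from whatever meter you use), works out the clean gain needed to reach -16 LUFS, and reports how far the peaks would overshoot the -1 dBTP ceiling, i.e. roughly how much peak reduction the limiter has to do. It assumes a gain change moves LUFS and true peak by the same number of dB; in practice limiting also lowers loudness a little, so treat the numbers as a starting point and re-measure the whole song. All names are hypothetical.

```python
from typing import NamedTuple

TARGET_LUFS = -16.0     # integrated loudness target discussed in this article
TP_CEILING_DBTP = -1.0  # true-peak ceiling discussed in this article


class MasterPlan(NamedTuple):
    gain_db: float               # clean gain needed to reach the loudness target
    peak_after_gain_dbtp: float  # predicted true peak if only that gain were applied
    peak_reduction_db: float     # how far the limiter must pull peaks down (0 = none)


def plan_master(measured_lufs: float, measured_tp_dbtp: float,
                target_lufs: float = TARGET_LUFS,
                ceiling_dbtp: float = TP_CEILING_DBTP) -> MasterPlan:
    """First-order estimate of the limiting needed to hit the loudness target.

    ASSUMPTION: a plain gain change shifts LUFS and true peak by the same number
    of dB. Limiting itself lowers loudness slightly, so re-measure afterwards.
    """
    gain = target_lufs - measured_lufs
    peak_after_gain = measured_tp_dbtp + gain
    reduction = max(0.0, peak_after_gain - ceiling_dbtp)
    return MasterPlan(gain, peak_after_gain, reduction)


if __name__ == "__main__":
    # Hypothetical mix readings: (integrated LUFS, true peak dBTP).
    for lufs, tp in [(-20.0, -6.0), (-20.0, -2.0)]:
        plan = plan_master(lufs, tp)
        print(f"{lufs} LUFS / {tp} dBTP -> gain {plan.gain_db:+.1f} dB, "
              f"limit peaks by {plan.peak_reduction_db:.1f} dB")
```

A mix at -20 LUFS peaking at -6 dBTP needs +4 dB of gain and no limiting at all, while the same loudness peaking at -2 dBTP would need its peaks pulled down by about 3 dB.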

Usually at this point, producers start to freak out a little because the volume of their song is MUCH lower than they’re used to hearing, especially if they’re making electronic music. That’s OK! The whole point of the new standard is that we no longer need to squash things just for the sake of volume; your song will be just as competitive as a louder one but will retain much more detail. If you want to listen nice and loud while mastering to -16 LUFS, just turn up your monitors to compensate.

(Figure 3. A comparison of the same song mastered with -16 LUFS as the target and, below it, a version mastered with today’s heavily limited techniques. They will be the same volume to the end listener, but the lower version loses a lot of transients and detail and will sound dull or flat by comparison.)

At the same time, I’m a realist. I recognize that there are a lot of outlets our music will be played on (Beatport, DJ blogs, CDs, etc.) that don’t yet follow these loudness normalization standards, and in those places a track submitted at -16 LUFS will likely sound noticeably quieter than the tracks around it. For my clients, I often suggest we do two masters: one at the newer streaming standards to post on YouTube, send to the aggregator of our choice like CDBaby, etc., and a second master that’s been processed to achieve the volumes we’ve been used to up until now, so that they have something for more traditional playback options like CD.

This way you get the best of both worlds: a nice dynamic master that will sound great on the streaming options we have now (or future playback options we don’t even know about yet!), and a loud master for those times you need something with the loudness most people are used to. That lets you submit whichever version works best in any situation you might run into.

MP3 Is Dead

The other big news I want to talk about is the recent announcement that MP3 is dead. Or at least that’s what click-bait-driven websites and news outlets would have us believe. The real story is that the MP3 patents have expired, so in effect it’s not dead; it’s just free for developers to implement now, rather than requiring a licensing fee as in the past.

But what IS interesting about this news is that it’s bringing to light for many people the fact that MP3 has actually been outdated for 20 years now, and that audio compressed using the AAC standard is its successor. Developed by the same people who created the MP3 standard (technically, AAC is part of the MPEG-4 specifications, and AAC files usually carry the file extension “.m4a”), the AAC standard was created to replace MP3 way back in 1997! In fact, it’s already been recognized as the standard format for compressed audio by both the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC).

Many people mistakenly believe that AAC is something Apple came up with, since all of the songs we buy in iTunes are in that format. In reality, Apple was only following the standard laid out by the international organizations above. AAC is also the default compressed audio format for companies like Sony, YouTube, Google, BlackBerry, and more, for the same reason.

So, what makes AAC better than MP3? For one thing, the encoding itself is not only generally faster, but also represents the uncompressed version more accurately. This is especially true at lower bitrates, but in my listening tests I’ve found the same to be true at bitrates as high as 320 kbps. AAC also supports multi-channel audio, up to 48 full-bandwidth channels, which is what allowed Native Instruments to use AAC for their new Stems format. It has more robust tagging, error-correction, and metadata options, supports higher sample rates up to 96 kHz (MP3 technically only supports up to 48 kHz), and works much better at encoding frequencies above 16 kHz.

In fact, the only downside I’ve found to AAC is that some older versions of Firefox won’t play AAC files. That’s it. Otherwise, every device or piece of software that plays MP3s will play back AAC files too. So while it’s easy to just keep using what we already know, I’m increasingly steering my clients toward AAC files instead of MP3s. And remember, the people who created MP3 are the same ones who created AAC to replace it; it’s not a competing standard, it’s an updated version of the same one.

The easiest way to create AAC compressed files is with iTunes, which most of us have installed on our computers even if we don’t like it. Apple goes to great lengths to ensure that their AAC encoding is the best around, even going so far as to create the “Mastered For iTunes” program to allow mastering engineers access to tools to verify their masters will convert to the compressed format as cleanly as possible.* If you don’t want to convert your files with iTunes, most wave editors and DAWs these days will export to AAC as well, though sadly this is not present in Ableton Live as of yet.

(Figure 4. The settings for iTunes AAC conversion can be found in Preferences -> General -> Import Settings.)
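If you’d rather script the conversion, ffmpeg can also encode AAC. Here is a small Python sketch that builds (and can optionally run) such a command. It assumes ffmpeg is installed and on your PATH, the file names are hypothetical, and note that ffmpeg’s built-in `aac` encoder is not the Apple encoder iTunes uses, so results may differ from an iTunes conversion.

```python
import shlex
import subprocess
from typing import List


def aac_export_command(src_wav: str, dst_m4a: str, bitrate_kbps: int = 320) -> List[str]:
    """Build an ffmpeg command line that encodes a WAV master to AAC in an .m4a file."""
    return [
        "ffmpeg",
        "-i", src_wav,               # input: the uncompressed master
        "-c:a", "aac",               # ffmpeg's built-in AAC encoder (not Apple's)
        "-b:a", f"{bitrate_kbps}k",  # target audio bitrate, e.g. "320k"
        dst_m4a,                     # the .m4a extension selects an MP4-family container
    ]


def export_aac(src_wav: str, dst_m4a: str, bitrate_kbps: int = 320) -> None:
    """Run the encode. ASSUMPTION: ffmpeg is installed and on PATH."""
    subprocess.run(aac_export_command(src_wav, dst_m4a, bitrate_kbps), check=True)


if __name__ == "__main__":
    # Hypothetical file names; print the command instead of running it.
    print(shlex.join(aac_export_command("my_master.wav", "my_master.m4a")))
```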

Apple and most other online music retailers tend to use a bitrate of 256 kbps for their compressed files, which is generally comparable in quality to a 320 kbps MP3 file. I create 320 kbps AAC files myself: the file size is not much bigger, and it’s good to have a bit of future-proofing in place in case these companies switch to the higher bitrate.
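To put “not much bigger” into numbers: the size of a compressed audio file is roughly its bitrate multiplied by its duration. This sketch uses a hypothetical helper name and ignores container overhead and embedded artwork, so real files run slightly larger.

```python
def audio_size_mb(bitrate_kbps: float, duration_s: float) -> float:
    """Approximate audio payload in megabytes (1 MB = 1,000,000 bytes).

    Ignores container overhead, metadata and embedded artwork, so real files
    come out slightly larger.
    """
    bits = bitrate_kbps * 1000 * duration_s
    return bits / 8 / 1_000_000


if __name__ == "__main__":
    four_minutes = 240  # seconds
    for label, kbps in [("AAC 256 kbps", 256), ("AAC 320 kbps", 320),
                        ("WAV 16-bit/44.1 kHz stereo", 1411.2)]:
        print(f"{label}: {audio_size_mb(kbps, four_minutes):.2f} MB")
```

For a 4-minute track that works out to about 7.68 MB at 256 kbps versus 9.6 MB at 320 kbps, a difference of under 2 MB, compared with roughly 42.3 MB for the same track as a 16-bit/44.1 kHz stereo WAV (1411.2 kbps).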

So while there’s nothing wrong with continuing to use MP3s, and in fact there are some great-sounding MP3 encoders out there, the truth is that it’s a standard that has already been superseded for many years now. It’s time musicians and producers got on board with the current standard, since it has almost no downsides and could potentially offer us a lot more going forward. At the very least, it offers you a compressed format that sounds closer to your original uncompressed masters than ever before, and who wouldn’t want that?

I hope this quick primer on some of the more recent changes in mastering has helped. If you have any questions, feel free to reach out to me via the links below; I’m always happy to help!”

-Erik Magrini (a.k.a. Tarekith): http://tarekith.com

Inner Portal Studio: http://innerportalstudio.com

*For details of the ‘Mastered For iTunes’ program, please read this article.

About The Trainer

Erik Magrini (a.k.a. Tarekith) is the owner of Inner Portal Studio, a Seattle-based facility providing professional mastering for musicians around the world for over 16 years. In addition to his experience doing mastering and mixdowns, over the last 24 years he’s also written music for TV commercials, worked as a product tester for some of the largest instrument and software manufacturers (Ableton, Roland, Access, E-mu, Audiofile Engineering), been voted a “Future Sound Of Chicago” DJ by Shure, and run live sound for Seattle’s Decibel Festival as well as for raves and clubs across the Midwest of the United States. He is also an active musician and live performer!
